Paradise ponders: router table and sushi edition... Per a reader's request, a photo (at right) of our new oven, installed. Above it is the microwave I installed a couple months ago. Note the walnut trim strip between the microwave and the oven – to our surprise, I didn't need to modify that strip to accommodate the new oven. The old oven and the new oven are less than 1/16" different in height!

Well, the remaining parts for the router table were delivered on Friday afternoon, as planned. Yesterday morning I used them to completely finish the router table (at left). Most of this work was trivially easy, but one part was ridiculously hard. In the photo you can see a rectangular plate with a circular hole in the middle through which the router spindle projects. That plate is cast iron (same as the rest of the table), but it sits on four bolts in the corners that are used to adjust its height. The idea is that you need to match – exactly – the height of the surrounding table. Well, that's easier said than done! I found myself making adjustments as small as a tenth of a turn on those bolts. They're extremely awkward to reach, as the only access is through a small door in the enclosure underneath the table top. It took me nearly two hours to get them perfect enough to make me happy. Those bolts have jam nuts on them, but I hope they don't move! The crank handle at the left top engages with a nut visible just to the right of the router spindle. Turning that nut slowly raises and lowers the router assembly. This is a very nice way of setting the height of the bit. Overall I'm very pleased with this router table: it's solid, has an absolutely flat and smooth table, the fence is very nicely done, and the router lifter is definitely the cat's meow. I've only used it on a few test pieces so far, but it worked beautifully on those...

Yesterday afternoon we went out to celebrate two things: me finishing the router table (what a kit that was!) and Debbie doing her first day of good exercise since her most recent surgery. Debbie had a small beer and a noodle dish she managed to eat entirely. There were a lot of happy noises and smiles from her side of the table. I had the sashimi at right (hamachi, sake, maguro, and white "tuna" (escolar)). All delicious! I also had a rainbow roll with the same four fish. I am so glad we found out about sushi at Black Pearl, as it's by far the best in our area (at least, the best of all those I've discovered!).

Sunday, April 30, 2017

A wall around California...

A wall around California... I suspect the majority of the population of the Mountain States would think this was a very good idea!

Saturday, April 29, 2017

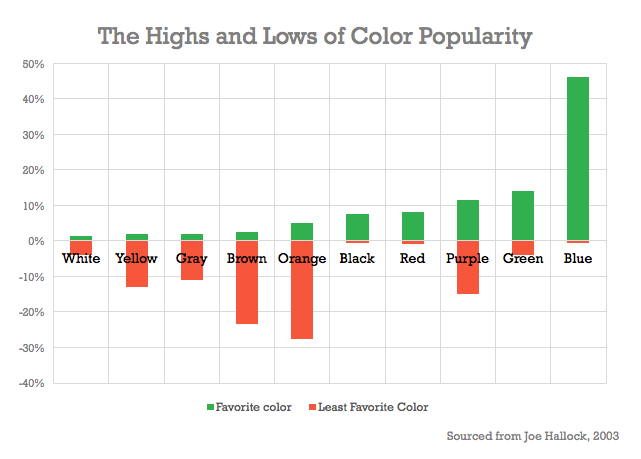

Favorite colors...

Favorite colors... It would never have occurred to me to study the question of what colors are people's favorites. Nor would I have expected that there would be significant differences in the popularity of various colors. But there apparently are:

My own favorite color? Well, I'd have a hard time choosing between bright orange (like a California poppy) and bright yellow (like a nice lemon). Maybe a slight preference for the orange. That puts me solidly in the weirdo category, it seems. One of my friends likes to call me “six sigmas” because I'm at least six standard deviations from normal (though I claim eight!). This seems to be yet another dimension in which I deviate... :)

Paradise ponders: Utah gratitude edition...

Paradise ponders: Utah gratitude edition... I fled to Utah from California just three years ago, after living in that sunny land for 43 years. When I first came to California, in 1971, I was coming from New Jersey. The California of 1971 was a paradise by comparison to the hell-on-earth that was New Jersey. Back then, even California's government was better.

It was all downhill from there. By the time we retired in 2013, it was obvious to Debbie and me that our long-planned retirement in the mountains of San Diego wasn't going to be the dream we'd thought it would be. We made up our minds to leave, and we chose Utah for many reasons.

It turns out, though, that we'd underestimated (by a lot!) just how well we'd fit in here in Utah. We'd expected the natural beauty, as we'd traveled here many times. We knew the weather would include actual seasons. We hoped that the government would prove to be less intrusive, more common-sensical, and it has. But we did not expect the people here to be so welcoming, despite our not being of the predominant faith here (LDS). We also didn't expect some other things, slightly subtler, but that turn out to be very important to us.

I'd say the biggest one, for me, is the general assumption of honesty here. In general, people just trust one another. If you tell a craftsman that you agree with their price, they'll just proceed with the work and trust that you'll pay them. If you leave your wallet somewhere, you can trust that it will be held for you, contents intact. If you leave your car unlocked, you can trust that it will not be stolen. If you carry ten items to the cash register in a store and tell the clerk you have ten of them, she'll just ring you up for ten without even counting. And so on. I'm talking about out here in the countryside, where we live – the story is different in the cities, of course. Out here, though, people just assume that you're honest in your dealings. How refreshing, especially compared with our experiences in California!

Another slightly subtle surprise for us: we can depend on others around here for help, and they assume they can depend on us. I still get surprised by this quite often – people are all the time volunteering to help out, and they really mean it.

And some of our neighbors have become good friends, despite the vast differences in our backgrounds and experiences.

We're very grateful for the wonderful home that Utah has made for us.

And when we read things about California, like this or this, we're even more grateful for Utah providing us with refuge!

Friday, April 28, 2017

Paradise ponders: snow and router table edition...

Paradise ponders: snow and router table edition... The router table is now complete except for the actual router and its lifter (they're supposed to arrive today). The rest of the kit went together just as perfectly as the first third. The only challenging part turned out to be putting that cast iron top on. That thing was heavy (I'm guessing about 70 pounds), and the bolts that attach it to the stand go up through the bottom of it. That was a real booger to get on, because the top's mounting holes had to be precisely aligned with the corresponding holes in the stand, and because I couldn't see a darned thing inside the cabinet (no space to get my fat head in there). The first photo below shows the wood chip box that's supposed to make this router table nearly dust and chip free. We'll see how well that works!

Our weather today is really hard to believe for being nearly May. We've had rain, hail, sleet, and snow today, all day long. Right now it's raining. It's about 35°F outside, so the snow and sleet aren't sticking very long, thankfully. Our trees (at right) had some snow on their leaves, though. But once again, it's wet as heck outside – there's mud everywhere. Dang.

AI and deep learning...

AI and deep learning... My longtime readers will know that I am very skeptical of artificial intelligence (AI) in general. By that I mean two separate things these days, as the field of AI has split into two rather different things over the past few years.

The first (and oldest) meaning was the general idea of making computers “intelligent” in generally the same way as humans are intelligent. Isaac Asimov's I, Robot stories and their derivatives perfectly illustrated this idea. The progress researchers have made on this sort of AI is roughly the same as the progress they've made on faster-than-light space travel: nil. I am skeptical that they ever will, as everything I know about computers and humans (more about the former than the latter!) tells me that they don't work the same way at all.

The second, more recent kind of AI is generally known by the moniker “deep learning”. I think that term is a bit misleading, but never mind that. For me the most interesting thing about deep learning is that nobody knows how any system using deep learning actually works. Yes, really! In this sense, deep learning systems are a bit like people. An example: suppose you spent a few days learning how to do something new – say, candling eggs. You know what the process of learning is like, and you know that at the end you will be competent to candle eggs. But you have utterly no idea how your brain is giving you this skill. Despite this, AI researchers have made enormous progress with deep learning. Why? Relative to other kinds of AI, it's easy to build. It's enabled by powerful processors, and we're getting really good at building those. And, probably most importantly, there are a large number of relatively simple tasks that are amenable to deep learning solutions.

We know how to train deep learning systems (which are programs, sometimes running on special computers), but we don't know how the result works, just as with people. You go through a process to train them how to do some specific task, and then (if you've done it right) they know how to do it. What's fascinating to me as a programmer is that no programming was involved in teaching the system how to do its task – just training of a general purpose deep learning system. And there's a consequence to that: no programmer (or anyone else) knows how that deep learning system actually does its work. There's not even any way to figure that out.

There are a couple of things about that approach that worry me.

First, there's the problem of how that deep learning system will react to new inputs. There's no way to predict that. My car, a Tesla Model X, is a great example of such a deep learning system. It uses machine vision (a video camera coupled with a deep learning system) to analyze the road ahead and decide how to steer the car. In my own experience, it works very well when the road is well-defined by painted lines, pavement color changes, etc. It works much less well otherwise. For instance, not long ago I had it in “auto-steer” on a twisty mountain road whose edges petered off into gravel. To my human perception, the road was still perfectly clear – but to the Tesla it was not. Auto-steer tried at one point to send me straight into a boulder! :) I'd be willing to bet you that at no time in the training of its deep learning system was it ever presented with a road like the one I was on that day, and therefore it really didn't know what it was seeing (or, therefore, how to steer). The deep learning method is very powerful, but it's still missing something that human brains are adding to that equation. I suspect it's related to the fact that the deep learning system doesn't have a good geometrical model of the world (as we humans most certainly do), which is the subject of the next paragraph.

Second, there's the problem of insufficiency, alluded to above. Deep learning isn't the only thing necessary to emulate human intelligence. It likely is part of the overall human intelligence emulation problem, but it's far from the whole thing. This morning I ran across a blog post talking about the same issue, in this case with respect to machine vision and deep learning. It's written by a programmer who works on these systems, so he's better equipped to make the argument than I am.

I think AI has a very long way to go before Isaac Asimov would recognize it, and I don't see any indications that the breakthroughs needed are imminent...

Paradise ponders: of ovens and arduous kits...

Paradise ponders: of ovens and arduous kits... Yesterday morning we finally got our new oven (Bosch photo at right) delivered and installed. We were supposed to get it this past Monday, but after looking at it Darrell realized he was going to need some help – the beast weighs 180 pounds! Darrell (the owner of Darrell's Appliances), with Brian to help him, had it installed in less than an hour after arriving. I had already removed our old oven and wired an electrical box, which saved them some work. However, there was a challenge for them: the opening in our cabinetry was 3/8" too narrow. Darrell set up some tape as a guide, whipped out his Makita jig saw, and had that problem fixed in a jiffy. There was a bit of a struggle to get it off the floor and into the hole, but once they did everything else went smoothly. It seems to work fine. The controls are a breeze to use. We haven't cooked anything yet, but I'm sure Debbie will pop something in there soon! :) One big surprise for us: it comes with a meat thermometer that plugs into a jack inside the oven. How very convenient!

Yesterday I started building the new router table I bought (Rockler photo at right). When I took delivery of the table, I thought there had been some kind of mistake: it came in two flat boxes, just a couple inches thick and not all that big. No mistake, though – it's just a serious kit. By that I mean that what you get is some pieces of sheet metal, cut, drilled, bent, and threaded as needed, along with a bag of nuts and bolts. A big bag of nuts and bolts! The packing was ingenious – a whole lot of metal pieces packed into a very small volume. I was a bit dismayed upon seeing the kit after unpacking it, as my general experience in assembling kits made mostly of sheet metal is ... pretty bad. Things usually don't fit right, and I end up using pliers, hacksaws, rubber mallets, and nibblers to get everything to fit. Often I have to drill and thread my own holes. Worse, usually the edges of the sheet metal are as sharp as a razor blade and I end up looking like the losing side of a knife fight.

I'm about one third of the way through the assembly, and so far I am very pleasantly surprised. With just a single exception, every part has fit precisely correctly, first try. Better yet, the edges of the sheet metal don't seem quite so sharp – no cuts yet (and no bloodstains on the goods!). The one exception was a minor one: I needed one gentle tap of the rubber mallet to get a recalcitrant threaded hole to line up with a drilled hole. The directions are crystal clear, with great illustrations. I hope the rest of the assembly is just as nice!

Thursday, April 27, 2017

NASA at...

NASA at ... its most awesome, and at its least awesome... Too bad there's so much more of the latter than the former...

Paradise ponders: artichokes, kits, better indexes, and electricity edition...

Paradise ponders: artichokes, kits, better indexes, and electricity edition... Yesterday Debbie made a new dish – a casserole of chicken, artichokes, onions, Parmesan cheese, and mayonnaise. That's her serving at right, over rice. This one is a keeper, and I was a bit surprised just how tasty those artichokes were. They were canned, in water, not the marinated kind I'm familiar with. The water was salted, so we leached as much as possible out by soaking them in fresh water, replaced several times, and that seemed to work very well. A keeper recipe for sure! Both of us thought of several modifications we could make to it that might be even better. I sense some artichokely experimentation in our future...

Over the past few days some tools I've ordered arrived: a couple of jigs (for making box joints and mortise-and-tenon joints) and a router table. All three of these are kits – boxes of parts with bags of nuts and bolts. Big bags of nuts and bolts. I'm gonna be putting things together for a while before I can use them. All three of these were long-planned, but I need them now to let me build some stuff for the cattery. Somehow I didn't expect the jigs to be kits, but the fact that the router table is a kit doesn't really surprise me...

A couple days ago I posted about an index to look up the value of the largest decimal digit that is smaller than a given binary value. The code that I posted was ... not optimal. Here's a better attempt:

In addition to a better algorithm, I also made the code easier to read. The compiler optimizes the local variables away, so there's no performance impact to this. The 9 bit index generated is pretty simple. If 2^k is the most significant bit in _val, then the upper six bits of the index are k, and the lower three bits are the bits in _val at 2^(k-1), 2^(k-2), and 2^(k-3) (with a bit of special casing for k < 3). The code:

    // k, the position of the most significant set bit (_val == 0 would give mag = -1)
    int lz = Long.numberOfLeadingZeros( _val );
    int mag = 63 - lz;
    // upper six bits of the index hold k
    int hi = mag << 3;
    // clear the most significant bit, leaving only the bits below it
    long sec = Long.highestOneBit( _val ) ^ _val;
    // lower three bits of the index: the (up to) three bits just below the top bit
    int lo = (int)(sec >>> Math.max(0, mag - 3));
    int index = hi | lo;
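To make the index layout described above concrete, here is a minimal, self-contained sketch that wraps the same computation in a method and prints the index for a few sample values. The class name `DecimalIndexSketch`, the method name `indexFor`, and the rejection of zero are assumptions made for illustration; they are not from the original code.

```java
/** Sketch of the 9-bit index computation; class and method names are hypothetical. */
final class DecimalIndexSketch {

    /**
     * Returns the 9-bit index for val: the upper six bits are k (the position of
     * the most significant set bit) and the lower three bits are the bits just
     * below it. Rejecting zero is an assumption of this sketch; the original
     * snippet does not say how a zero input is handled.
     */
    static int indexFor(long val) {
        if (val == 0) {
            throw new IllegalArgumentException("val must be non-zero");
        }
        int mag = 63 - Long.numberOfLeadingZeros(val);  // k, in 0..63
        int hi = mag << 3;                              // k in the upper six bits
        long sec = Long.highestOneBit(val) ^ val;       // val with its top bit cleared
        int lo = (int) (sec >>> Math.max(0, mag - 3));  // up to three bits below the top bit
        return hi | lo;
    }

    public static void main(String[] args) {
        long[] samples = {1L, 3L, 8L, 15L, 1000L, Long.MAX_VALUE};
        for (long v : samples) {
            System.out.println(v + " -> " + indexFor(v));
        }
    }
}
```

For example, 1000 is 1111101000 in binary, so k = 9 and the three bits below the top bit are 111, giving (9 << 3) | 7 = 79. Every non-zero input maps to an index in the range 0 to 511, which is why nine bits are enough.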

I'm going to be putting up a little shed soon, to house some components of our sprinkler system. I had my favorite electrician (Tyler, from Golden Spike Electric) out yesterday evening to check out the job. It's such a pleasure to work with people like him! I want something kind of weird (naturally!), and he had absolutely no problem accommodating me. I'm going to have him run a 50 amp 220 volt circuit to a subpanel in the new shed, and I'm going to wire it from there. “No problem”, says he – and we looked over the existing panel that he'd connect to. There was a circuit installed long ago that ran to an external plug for an RV. This is not something we'll ever need, so we're going to remove that and steal the existing breaker (which happens to be 50 amp!) for my new subpanel. Easy peasy!

Wednesday, April 26, 2017

Paradise ponders: Debbie's “graduation”, filling station milestone, steam train, and tax cuts...

Paradise ponders: Debbie's “graduation”, filling station milestone, steam train, and tax cuts... This morning Debbie went in to see her surgeon for a two week post-surgery followup. He pronounced her recovery to be great, ready to get her staples taken out, and cleared for any physical activity that didn't cause her pain. She looked so good, in fact, that he declared it unnecessary for her to have the usual subsequent followup visits. This prompted a nurse who was in the room to comment that Debbie had “graduated”. Yay! The staples came out (something that Debbie really hates – she crushed my hand and buried her head in my shoulder while the nurse did this), and now her big job is to see how far she can push herself with exercise before it gets painful. First milestone there will be walking without any walker, crutches, or cane. The surgeon said she could be doing this just as soon as she can comfortably put full weight on her left knee.

Yesterday the guys were here working on our filling station. They got all the pipe cut, threaded, and installed – the tanks are now actually connected to the nozzles! I just put in a call to my fuel supplier, and within a few days I should actually have some gasoline and diesel fuel sloshing around in those tanks. There's still more work to do on the filling station, but we can use it while that work gets done. I've just ordered the steel door to go in the front of it; that should be here in six weeks or so. I've also got Randy Bingham (the mason who did our fireplace) lined up to put rock on top and all around it, but it might be a while before that gets done. Progress!

Yesterday we'd planned to go see a steam locomotive that was scheduled to stop for just a half hour in Cache Valley. The place where it was to stop was the teensy little town of Cache Junction, just south of my brother Scott's home. This town has about four houses, and it's kind of in the boonies of Cache Valley – so we weren't expecting many people to show up for it. Man, were we wrong! There were a couple hundred cars parked there, overflowing the little parking area to line the sides of the roads for a half mile or so on both sides of town. I'd guess that around a thousand people were milling all about. In that environment there was no way for us to park close by for a view of the train, and Debbie's not ready to walk over rough ground, so we just drove through town and marveled at the size of the crowd. We visited for a little while with my brother (where we picked up some beautiful logs he'd saved for me) and on the way out we did get to see the locomotive as it approached Cache Junction. We crossed the tracks about 2 miles from Cache Junction and were amused to see that a crowd had gathered there as well. This steam locomotive was darned popular here!

As I write this post, news is trickling in about Trump's proposed tax cut. I have no idea what the chances are that this will actually get through Congress intact, but I'm skeptical given the magnitude of the cuts and the general hostility of the Democrats. But one aspect of the proposed plan jumped out at me: repealing the federal income tax deduction for state and local taxes. This deduction is, in effect, a federal subsidy that disproportionately benefits residents of high tax states. I'd be delighted to see that actually be repealed, but it's easy to foresee that the Democrats will start crazed howling about this one. That's so obvious that I have to wonder if it was included in the proposal mainly as a sop whose removal could be traded for Democratic votes in the Senate...

Yesterday the guys were here working on our filling station. They got all the pipe cut, threaded, and installed – the tanks are now actually connected to the nozzles! I just put in a call to my fuel supplier, and within a few days I should actually have some gasoline and diesel fuel sloshing around in those tanks. There's still more work to do on the filling station, but we can use it while that work gets done. I've just ordered the steel door to go in the front of it; that should be here in six weeks or so. I've also got Randy Bingham (the mason who did our fireplace) lined up to put rock on top and all around it, but it might be a while before that gets done. Progress!

Tuesday, April 25, 2017

Binary to decimal conversion performance...

Binary to decimal conversion performance... Now here's an obscure topic that I've worked on a great many times over my career. The original inspiration for me to work on this came from programming the Z-80, way back in the '70s. That little 8 bit microprocessor could do 16 bit addition, but it had no multiply or divide instructions at all. Often the application I was working on needed higher precision math (usually 32 bits), so I had a library of assembly language subroutines that could do the basic four 32 bit operations: add, subtract, multiply, and divide. Multiply and divide were maddeningly slow. Eventually I came up with a way to speed up multiply considerably, but divide was intractably slow.

This slow divide performance was on full display anytime I wanted to convert a 32 bit binary integer to its decimal equivalent. The canonical algorithm uses repeated division-with-remainder by ten. For instance, to convert the binary number 11001001 to its decimal equivalent, you would do this:

11001001 / 1010 = 10100 remainder 1

10100 / 1010 = 10 remainder 0

10 / 1010 = 0 remainder 10

The result is then the remainders, in decimal, in reverse order: 201. You need one division operation for each digit of the result. A 32 bit number might have as many as ten digits, and on that low-horsepower little Z-80, those divisions were really expensive. So I worked hard to come up with an alternative means of conversion that didn't require division.
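For comparison, here is a minimal Java sketch of the canonical divide-by-ten conversion described above. The class and method names are mine, invented for illustration; it handles only non-negative values and is meant to show where the one-division-per-digit cost comes from, not to be a tuned implementation.

```java
final class DivideByTenDemo {
    /** Converts a non-negative int to decimal text by repeated division by ten. */
    static String toDecimal(int value) {
        if (value < 0) {
            throw new IllegalArgumentException("non-negative values only");
        }
        if (value == 0) {
            return "0";
        }
        StringBuilder digits = new StringBuilder();
        while (value != 0) {
            int remainder = value % 10;               // least significant digit comes out first
            digits.append((char) ('0' + remainder));
            value /= 10;                              // one division per digit produced
        }
        return digits.reverse().toString();           // the remainders arrive in reverse order
    }

    public static void main(String[] args) {
        // The 11001001 example from the text.
        System.out.println(toDecimal(0b11001001));
    }
}
```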

What I came up with has been independently invented by many people; I wasn't the first, I'm sure, and I don't know who was. The basic technique depends on a simple observation: there aren't all that many possible decimal digits whose value fits in a given length binary number. For instance, in a signed 32 bit binary number, the largest positive decimal number that can be represented is 2,147,483,647. That's 10 decimal digits, any of which can have the non-zero values [1..9], except the most significant one can only be [1..2]. That's just 83 values. If you pre-compute all those 83 values and put them in a table, then you can convert a binary number to decimal with this algorithm:

start with the binary value to be converted

while remaining value is nonzero

    find the largest value of a decimal digit <= the remaining value

    record the digit (any decimal position skipped along the way is a zero)

    subtract that decimal digit value from the remaining value

This produces the decimal digits in order, from the most significant digit to the least significant digit. Most importantly, it does so with no division required.
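The table-and-subtract loop above can be sketched in Java roughly like this, for positive 32 bit values. Everything here (class name, table layout, the simple downward linear scan) is my own assumption for illustration, not the original Z-80 code; the search step is deliberately the naive one.

```java
import java.util.Arrays;

final class TableConversionDemo {
    // Every nonzero digit value that fits in a positive int, ascending:
    // 1..9 times 10^0 through 10^8, plus 1 and 2 times 10^9 (83 entries).
    private static final int[] VALUES = new int[83];
    private static final byte[] DIGITS = new byte[83];
    private static final byte[] PLACES = new byte[83];

    static {
        int n = 0;
        long power = 1;
        for (int place = 0; place <= 9; place++, power *= 10) {
            for (int digit = 1; digit <= 9 && digit * power <= Integer.MAX_VALUE; digit++) {
                VALUES[n] = (int) (digit * power);
                DIGITS[n] = (byte) digit;
                PLACES[n] = (byte) place;
                n++;
            }
        }
    }

    /** Converts a non-negative int to decimal text using only compares and subtracts. */
    static String toDecimal(int value) {
        if (value < 0) {
            throw new IllegalArgumentException("non-negative values only");
        }
        if (value == 0) {
            return "0";
        }
        char[] out = null;
        int remaining = value;
        int i = VALUES.length - 1;
        while (remaining != 0) {
            // Naive linear scan downward for the largest digit value <= remaining.
            while (VALUES[i] > remaining) {
                i--;
            }
            if (out == null) {
                // The first hit is the most significant digit, which fixes the length.
                out = new char[PLACES[i] + 1];
                Arrays.fill(out, '0');   // positions never hit stay zero
            }
            out[out.length - 1 - PLACES[i]] = (char) ('0' + DIGITS[i]);
            remaining -= VALUES[i];
        }
        return new String(out);
    }
}
```

Pre-filling the output with zeros is one way to handle decimal positions that the loop never visits, such as the middle digit of 201.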

That was vital to getting decent performance out of that Z-80. But what about a modern processor? Well, it turns out that in relative terms, division is still the red-haired stepchild of CPU performance. On a modern Intel or ARM processor, 64 bit integer division can take over 100 CPU clock cycles. Multiply operations, by comparison, take just a handful of clock cycles, while addition and subtraction are generally just 1 or 2 clock cycles. Even when using a high-level language like Java, algorithms that avoid using division repetitively can still produce large performance gains. I've done this five or six times now, most recently to get a 6:1 performance gain when converting floating point values to XML.

One line of the above algorithm I sort of glossed over: the bit about finding the largest value of a decimal digit that is no greater than the remaining value. The naive way to do this would be to use a linear search, or possibly a binary search of the table of decimal digit values. Either way requires a number of iterations over the search algorithm: a binary search over the 83 entry table for 32 bit values needs up to 7 iterations. For 64 bit values we'd have 171 decimal digit values, and we'd need up to 8 iterations for that binary search.

We can do better, though, by taking advantage of the fact that there's a close relationship between the binary values and the largest fitting decimal digit. I just finished coding up a nice solution for finding the largest decimal digit that fits into a signed 64 bit integer. It works by using a table with 512 entries, about three times as many as we have possible decimal digit values. Given a 64 bit positive binary number, the Java code that computes the index into that table is:

int lz = Long.numberOfLeadingZeros( value );

int index = ((63 - lz) << 3) + (0xf & (int)Long.rotateRight( value, 60 - lz));

Pretty simple code, really. It's also very fast. Best of all, with a properly constructed table the index will point to an entry that will either be the correct one (that is, the largest decimal digit that will fit into the value) or it will be too big. If it is too big, then the preceding entry in the table is guaranteed to be the correct one. Much better than the iterative searches!
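One way such a table might be built is sketched below. The post doesn't show the table itself, so the layout is a guess on my part: I assume the 512 entries hold positions in a sorted list of the 171 digit values, each entry pointing at the largest digit value that fits the biggest number in its bucket. All names are hypothetical; only the index computation is taken from the code above.

```java
import java.util.Arrays;

final class DigitValueLookupDemo {
    // All 171 nonzero digit values that fit in a positive long, ascending:
    // 1..9 times each power of ten from 10^0 through 10^18.
    static final long[] DIGIT_VALUES = new long[171];
    static final char[] DIGIT = new char[171];   // digit character for each entry
    static final byte[] PLACE = new byte[171];   // decimal position (0 = ones)
    // Assumed layout: for each bucket index, the position in DIGIT_VALUES of the
    // largest digit value <= the biggest value that maps to that bucket.
    static final short[] BUCKET = new short[512];

    static {
        long power = 1;
        for (int place = 0; place < 19; place++) {
            for (int digit = 1; digit <= 9; digit++) {
                int i = place * 9 + digit - 1;
                DIGIT_VALUES[i] = digit * power;
                DIGIT[i] = (char) ('0' + digit);
                PLACE[i] = (byte) place;
            }
            if (place < 18) {
                power *= 10;   // 10^19 would overflow a long
            }
        }
        for (int index = 8; index < 512; index++) {
            int topBit = (index - 8) >> 3;         // position of the highest set bit
            int topFour = ((index - 8) & 7) + 8;   // the top four bits, always 8..15
            int shift = topBit - 3;
            long hi = shift >= 0
                    ? ((long) topFour << shift) | ((1L << shift) - 1)
                    : topFour >> -shift;           // tiny values: one value per bucket
            int pos = Arrays.binarySearch(DIGIT_VALUES, hi);
            BUCKET[index] = (short) (pos >= 0 ? pos : -pos - 2);
        }
    }

    /** Position in DIGIT_VALUES of the largest digit value <= value (value > 0). */
    static int largestFitting(long value) {
        int lz = Long.numberOfLeadingZeros(value);
        int index = ((63 - lz) << 3) + (0xf & (int) Long.rotateRight(value, 60 - lz));
        int entry = BUCKET[index];
        if (DIGIT_VALUES[entry] > value) {
            entry--;   // too big, so the preceding entry is the right one
        }
        return entry;
    }

    /** Converts a non-negative long with no division and no iterative search. */
    static String toDecimal(long value) {
        if (value < 0) {
            throw new IllegalArgumentException("non-negative values only");
        }
        if (value == 0) {
            return "0";
        }
        char[] out = null;
        long remaining = value;
        while (remaining != 0) {
            int i = largestFitting(remaining);
            if (out == null) {
                out = new char[PLACE[i] + 1];
                Arrays.fill(out, '0');
            }
            out[out.length - 1 - PLACE[i]] = DIGIT[i];
            remaining -= DIGIT_VALUES[i];
        }
        return new String(out);
    }
}
```

The single step back relies on no bucket containing more than one digit value boundary. The tightest case is the pair 9 x 10^n and 10^(n+1), which are closer together than the widest bucket, so it is worth checking values around powers of two and powers of ten against Long.toString before trusting a table like this.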

With those two techniques in combination, I'm doing conversions from binary to decimal with no divisions and no iterative searching. It's very challenging to benchmark performance in Java, but my simple testing shows about 5:1 improvement in performance for random numbers in the 64 bit positive integer range. Smaller numbers (with fewer decimal digits) show smaller performance gains, but always at least 2:1. I'll call that a win!

Monday, April 24, 2017

Paradise ponders: rain, and strange coincidences edition...

Paradise ponders: rain, and strange coincidences edition... It is pouring on us right now (see the radar snapshot at right). I'm up in my barn office, and the noise of the rain hitting the steel roof over my head is deafening.

This morning I spoke for a little while with the attorney I'm using to handle a real estate transaction in Virginia. He mentioned that he was a little familiar with Utah, as he used to come out here for vacations, especially near Moab. So I shared a bit with him about our numerous trips there, and I mentioned that we knew the La Sal Mountains very well. He then related his happy visits to Pack Creek Ranch, a now-defunct resort on the western slopes of the La Sals. Debbie and I have very happy memories of that place, having stayed there several times ourselves. It's a little bitty resort, way out of the way, exactly our sort of place precisely because it's not very popular. And yet ... this lawyer from Virginia knew it, and had stayed there three or four times. What a bizarre coincidence!

A step taken...

A step taken... This morning I registered a new domain: decinum.org. I was a bit surprised that it hadn't already been taken – my usual experience with finding domain names is that the first 1,000 or so that I try have already been snagged. :) Further, to my amazement, 60 seconds after registering decinum.org, the DNS resolution was already working. The first domain I ever registered (dilatush.com, back in '93) took several days to get that far.

This new registration is a first step for an open source project I'm going to start working on. The main purpose is to actually implement the observations and ideas I've had for representing money in Java. The vast majority of that work lies in representing high precision decimal numbers, hence the domain name. I won't have any web site for decinum.org at first; I locked down the name mainly so I could safely use it for Java class names...

Sunday, April 23, 2017

Paradise ponders, 8' lumber, oven removal, and scallops edition...

Paradise ponders, 8' lumber, oven removal, and scallops edition... Tomorrow we're supposed to get our new oven installed. You may remember that we gave up on our old oven after three failed attempts to get it repaired. The new one is a Bosch model that we hope will make us just as happy as our Bosch dishwasher has. Also, it came highly recommended by our repair technician.

So this morning I tackled what I thought was likely to be an all-day job: removing the old oven and installing an electrical junction box. The house's previous owner had done an awesomely shabby job of wiring the old oven: he just used wire nuts to join the wires, covering them with approximately eight miles of electrical tape. It's the sort of electrical job you might imagine a child doing. I ran into something similar when I replaced the microwave directly above this oven, and there I had room to install a standard outlet box. The new oven is 220V, and those outlets are way too big to install in the cramped space the oven must fit in. So I went with a hard-wired junction box.

Taking the oven out turned out to be relatively easy. I had the old oven out in our garage just a half hour after starting. With some creative use of our hand truck, it wasn't even all that difficult. The hardest part was getting it onto the hand truck – wrangling 185 lbs of oven by myself was a bit of a challenge! But I got it...

We made a run to Home Depot and got all the electrical parts I needed. Installing them took all of another half hour. So by 10 am I was completely done with that job. Yay!

So we made another run to Home Depot, this time to buy the stuff I needed to build out a couple of frames around two of our basement windows in our cattery. These are the windows that we've opened up to our sun room. I removed the glass sliding windows, including their frame, and now we just have the steel frame that's set into the basement's concrete wall. I'm building a frame out of cedar 1x8's with the boards perpendicular to the plane of the old window. This is at the suggestion of our mason, who will use that frame as one edge of the rock he's installing.

I needed to buy five 8' long pieces of cedar (1x8s), so I did some measuring to see if it would be possible to fit those in our Model X. To my surprise, the answer was yes! About six inches of the boards needed to extend over the center console, which I covered with a shipping pad to protect it. The photo at right I took while standing behind the open rear hatch. You can see the “hole” between the two front seats where we stowed the boards, along with the pad protecting the console. We keep getting surprised by what we can carry in the Model X – it's really quite roomy inside (especially our five seat version with the rear seats folded down).

On the way to Home Depot, I stopped for a few groceries at Smith's. That's not where we usually shop, so while I was in there I did a little scouting. On the way by the seafood counter I spotted scallops – the great, big sea scallops that Debbie uses in her scrumptious baked scallops recipe. I bought a pound and a half, and Debbie is cooking them as I write this. Feast today!

Saturday, April 22, 2017

Jaw-dropping innumeracy test...

Jaw-dropping innumeracy test... I ran across this online somewhere (can't find it now), read their results and suspected shenanigans. I was wrong. In fact, my own tests suggest the innumeracy situation might even be worse than the report I read.

Here's the test:

Take a piece of blank paper. Draw a dot on the left and mark it “0”. Draw a dot opposite the first one, on the right, and mark it “1,000,000,000” (one billion). Draw a line between the two dots. That's a number line, like many of us learned in school. It represents all the numbers between zero and a billion, with the integers equally spaced. Now draw a third dot on the line where you think the number 1,000,000 (one million) belongs.

The report I read said that about half of people trying this test put the third dot in roughly the correct place; everyone else got it wrong. That's the result I was skeptical of. The correct place, of course, is 1/1000th of the line's length from the left. So if your line was 10 inches long, the third dot would be a mere 1/100th of an inch from the dot for zero.

So I've tried the test now on five people (a ludicrously small sample, I know), more or less randomly selected. None of them were even remotely close to the right answer. The most common place for the third dot was somewhere near the middle of the line. One person put it about 1/5th of the line's length from the right! I'm slightly comforted by the fact that none of these people were scientists or engineers ... but only slightly comforted.

I find this absolutely stunning. Somehow it never occurred to me that so many adults – probably a majority of adults – wouldn't intuitively grasp such basic concepts. That level of innumeracy means that things like discussions of government budgets are beyond their ken, as they don't understand the significance of the difference between millions, billions, or trillions of dollars (and probably not thousands, either!). Or basic astronomical concepts. Sheesh, much of science involves numbers covering multiple orders of magnitude.

Gobsmacked, I am...

Paradise ponders, brief “I’m busy!” edition...

Paradise ponders, brief “I’m busy!” edition... Yesterday none of our workers showed up, and no progress was made on any project. I hate days like that!

I squired Debbie down to Ogden for her hair appointment, which took 3.5 hours. She does this every five weeks, so that works out to roughly 40 hours a year. Me, I get a haircut every 5 or 6 months, whether I need it or not. :) My haircuts generally take less than 15 minutes. My haircuts cost $15, including a 50% tip (which the ladies who cut my hair are very happy with!). Debbie's cost ... you don't want to know, especially if you include the cost of the chemical soup she buys to maintain it. Now, I like her hair, mind you. I'm just noting the differences here, and pondering the magnitude of the investment of time and treasure. I cannot even imagine doing this myself...

When we were done with her hair appointment, we tried out a new restaurant in Ogden: Rosa's Cafe. We found it through Yelp. Debbie ordered a smothered pork burrito, and I had a chili relleno. Both were outstanding – they had a home-cooked vibe, much like our beloved Los Primos. The portions were gigantic, especially that burrito. Even better, the staff (allegedly a family) were just as friendly as the folks at Los Primos. We knew it was going to be good when we first opened the door. Debbie stood there for a moment, trying to figure out how to negotiate the small step up into the restaurant. An ample young man who works there stepped out to help with a big smile, and simply lifted her right up. Problem solved! An older man guessed that this was our first time, and advised us on what to get based on our tolerance for spiciness. We placed our order, grabbed some mango Jarritos from the fridge (again, just like Los Primos), and sat down to wait. Less than five minutes later, our food was in front of us. Mine came with rice and refries, and both of those were also outstanding – as was the giant, thick flour tortilla that came with the meal. That tortilla was obviously homemade for those burritos. The beans are made there, and man can you tell. The rice is something that most Mexican restaurants don't do well, with the rice typically grossly overcooked. Not here. The rice was perfect, the sauce beautifully done, and just the right amount of corn, carrots, etc. You can probably guess that we'll be going back. :)

Why am I busy today? I'm catching up on two weeks of neglected financial stuff. It's been accumulating in a pile on my desk, and today I shall demolish that pile...

Friday, April 21, 2017

Paradise ponders, filling stations, muddy dogs, and wet weather ahead edition...

Paradise ponders, filling stations, muddy dogs, and wet weather ahead edition... Well, the guys were here on Wednesday working on our filling station, and now the interior plumbing is ever-so-close to being finished. All that's missing now is a piece of channel steel to hold the upper pipes firmly in place no matter how hard we tug on the hoses, and a wooden assembly (which I'll build) to hold the nozzles. On the other end of the plumbing, they have yet to connect the pipes to the tanks; I'm expecting that to happen today. Yesterday I took all the measurements of the front opening, drew up a diagram, and sent it off to Lazy K Wrought Iron to get a quote for them to make us a door. The day before I sent off the specifications for a railing on our sun room steps, and the stanchions for our deck railing. We're going to keep those folks busy for a while!

Thursday we got most of an inch of rain, mainly in the morning. That means our property is muddy, even where there's grass, as the ground was already completely saturated from past rains. The dogs this morning provided the proof (as if we needed any): when I let them back in after their morning outing, they were all little mud-balls. Now our forecast (click left to embiggen it) shows an entire week with good chances of precipitation. If you add all those days up, that's well over an inch of additional rain coming. Man, we're going to be wet! All that precipitation will fall on saturated ground, both here in the valley and up in the mountains. That means our streams will fill rapidly each time there's rain – not quite the flash floods we saw in California's deserts, but fast enough you could observe it over the course of just a few minutes. Our reservoirs are all chock-full and already overflowing prodigiously, so if these rains actually materialize I suspect we'll be in for some more flooding.

Next Tuesday a working steam locomotive will be stopping for a half hour in Cache Junction. That's just a short distance south of my brother's cabin, about a half hour's drive from our home. I'm hoping that Debbie and I can make it up there to see it! Here's more about the No. 844 locomotive, and its 2017 schedule.

It's now 10 days since Debbie had the hardware removed from her knee, and she's recovering nicely. There was a fairly big incision, and her healing process this time is all about that. She can already put most of her weight on her left knee (the one that was operated on). That incision was closed with staples, and they come out next Wednesday. Once that happens, Debbie should be unleashed on the physical therapy process. So far as we know now, she'll be doing that entirely on her own. We're going to ask next week whether she should have a professional physical therapist work with her on it...

And speaking of Debbie... She can't quite drive on her own yet, as she needs a bit of help getting in and out of the car. But she has a hair appointment today, down in Ogden. So I will be playing chauffeur for part of the day, to get her down there and back. :) We'll most likely eat out somewhere while we're down there – wouldn't want to miss the opportunity!

Thursday we got most of an inch of rain, mainly in the morning. That means our property is muddy, even where there's grass, as the ground was already completely saturated from past rains. The dogs this morning provided the proof (as if we needed any): when I let them back in after their morning outing, they were all little mud-balls. Now our forecast (click left to embiggen it) shows an entire week with good chances of precipitation. If you add all those days up, that's well over an inch of additional rain coming. Man, we're going to be wet! All that precipitation will fall on saturated ground, both here in the valley and up in the mountains. That means our streams will fill rapidly each time there's rain – not quite the flash floods we saw in California's deserts, but fast enough you could observe it over the course of just a few minutes. Our reservoirs are all chock-full and already overflowing prodigiously, so if these rains actually materialize I suspect we'll be in for some more flooding.

Thursday, April 20, 2017

Prehistoric computing...

Prehistoric computing... Ken Shirriff does his usual incredibly nerdy computing archaeology...

Tough choices...

Tough choices... The algorithmic trading server I worked on a few years ago used three different numeric types to represent quantities of money (all USD, in its case). We used scaled integers (32 bit scaled by 100) to represent stock prices (trades, bid, and ask) because that was known to be sufficient precision for the task and was fast because those integers are native types. We used scaled longs (64 bits scaled by 10000) for foreign exchange prices (also trades, bid, and ask) for the same reasons. Finally, we used BigDecimal (Java's built-in decimal with arbitrary precision) for aggregate calculations because it was the only built-in decimal type with enough precision, and because calculations like that were relatively infrequent and the performance penalty wasn't too bad.

But using several different types for the same logical purpose had very bad consequences for our system's reliability. Why? Because on every calculation involving money in the entire system, the programmer had to carefully think about the operands, make sure they were in the same value representation, convert them correctly if not, do the calculation, then convert the result (if necessary) to the desired type. At the same time, the programmer had to correctly anticipate and handle any possible overflow.

So how bad could that be? Here's a real-world example from that same algorithmic trading server. Periodically we would look at the bid/ask stack for particular stocks within 5% of the last trade price. For a highly liquid stock that might involve several thousand entries, where each entry was a price and a quantity. We needed to get the sum of the extended price for each entry, and then an average price for the ask stack and separately for the bid stack. The prices were in scaled integers, the quantities also in integers - but their product could exceed what could be held even in our scaled longs. So we had to convert each price and each quantity to a BigDecimal, then do the multiplication to get the extended price. Then we had to sum all those extended prices separately for the bid and ask stack. We could sum the quantities safely in a long, so we did. Then we had to convert that summed quantity to a BigDecimal so we could divide the sum of extended prices by the summed quantity. The result was a BigDecimal, but we needed a scaled integer for the result – so we had to convert it back. That means we needed to (very carefully!) check for overflow, and we also had to set the BigDecimal's rounding mode correctly. Nearly every step of that process had an error in it, due to some oversight on the programmer's part. We spent days tracking those errors down and fixing them. And that's just one of hundreds of similar calculations that one server was doing!
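To make those steps concrete, here's a minimal sketch of that averaging calculation in Java. It is not the code from that server: the class and method names are hypothetical, prices are assumed to be cents held in an int, and HALF_EVEN rounding is an assumption (the right rounding mode depends on your own rules).

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical helper, for illustration only.
final class StackAverage {

    // Returns the quantity-weighted average price in cents (scaled by 100).
    // pricesCents[i] and quantities[i] describe one entry in a bid or ask stack.
    static int averagePriceCents(int[] pricesCents, int[] quantities) {
        if (pricesCents.length != quantities.length) {
            throw new IllegalArgumentException("prices and quantities differ in length");
        }
        BigDecimal extendedSum = BigDecimal.ZERO;
        long quantitySum = 0;
        for (int i = 0; i < pricesCents.length; i++) {
            // Convert both operands to BigDecimal before multiplying,
            // so the extended price cannot overflow.
            BigDecimal price = BigDecimal.valueOf(pricesCents[i], 2);
            BigDecimal quantity = BigDecimal.valueOf(quantities[i]);
            extendedSum = extendedSum.add(price.multiply(quantity));
            // Summing int quantities in a long is safe for any realistic stack size.
            quantitySum += quantities[i];
        }
        if (quantitySum == 0) {
            throw new ArithmeticException("no quantity in stack");
        }
        // Assumption: round to two decimal places with HALF_EVEN.
        BigDecimal average = extendedSum.divide(
                BigDecimal.valueOf(quantitySum), 2, RoundingMode.HALF_EVEN);
        // intValueExact throws ArithmeticException instead of silently truncating.
        return average.unscaledValue().intValueExact();
    }
}
```

Even in this small sketch there are four places to get things wrong: the scale on the way in, the explicit rounding mode, the scale on the way out, and the overflow check on the final conversion.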

Ideally there would be one way to represent the quantity of money that would use scaled integers when feasible, and arbitrary precision numbers when it wasn't. It's certainly possible to have a single Java class with a single API that “wrapped” several internal representations. This is much easier if the instances of the class are immutable, which would be good design practice in any case. The basic rule for that class at construction time would be to choose the most performant representation that has the precision required. We could have used such a class on that algorithmic trading server, and that would have saved us many errors – but none of us thought of it at the time...
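A rough sketch of what such a class might look like follows. Everything here is hypothetical (the name `Money`, the fixed scale of four decimal places, the method names), and it leaves out `equals`, `hashCode`, currency, and division. The point is only to show one immutable class picking the cheaper representation whenever the value fits.

```java
import java.math.BigDecimal;
import java.math.BigInteger;

// Hypothetical immutable money value; names and scale are illustrative only.
final class Money {
    private static final int SCALE = 4;  // assumption: four decimal places

    private final long scaled;     // valid when big == null
    private final BigInteger big;  // non-null only when the value does not fit in a long

    private Money(long scaled, BigInteger big) {
        this.scaled = scaled;
        this.big = big;
    }

    static Money ofScaled(long scaledValue) {
        return new Money(scaledValue, null);
    }

    // Chooses the compact representation whenever the value fits in 64 bits.
    private static Money normalize(BigInteger value) {
        return value.bitLength() < 64
                ? new Money(value.longValue(), null)
                : new Money(0L, value);
    }

    Money plus(Money other) {
        if (big == null && other.big == null) {
            try {
                return new Money(Math.addExact(scaled, other.scaled), null);
            } catch (ArithmeticException overflow) {
                // fall through to the arbitrary-precision path
            }
        }
        return normalize(toBigInteger().add(other.toBigInteger()));
    }

    Money times(long quantity) {
        if (big == null) {
            try {
                return new Money(Math.multiplyExact(scaled, quantity), null);
            } catch (ArithmeticException overflow) {
                // fall through to the arbitrary-precision path
            }
        }
        return normalize(toBigInteger().multiply(BigInteger.valueOf(quantity)));
    }

    boolean isCompact() {
        return big == null;
    }

    BigDecimal toBigDecimal() {
        return new BigDecimal(toBigInteger(), SCALE);
    }

    private BigInteger toBigInteger() {
        return big != null ? big : BigInteger.valueOf(scaled);
    }
}
```

Callers never see which representation is in use, and they never write an overflow check. Because instances are immutable, switching representations simply means returning a different object.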

Note: this is the last post of this series on representing and calculating monetary values in Java. I've provided a link for the series at right. If perchance some other semi-coherent thought occurs to me, I reserve the right to add to this mess...

Wednesday, April 19, 2017

Storing and transmitting monetary values...

Storing and transmitting monetary values... Most financial applications these days are not standalone applications, but rather components of much larger and more complicated systems. In a past life I was the CTO of a firm that made stock and option trading software, and I once made a map of all the systems that our servers communicated with. There were about a dozen separate machines in our own server room that talked to each other. There were also over two hundred other applications (in over thirty other organizations) that our servers talked to. Those communications paths used a dozen or so different protocols, not counting minor variations. In addition to all these connections, our servers also stored financial information: in memory, on disk, and in logs. That system was not an outlier – I've worked on dozens of other systems just as complex, or even more so.

This complexity has lots of implications when it comes to representing monetary values. What jumps right out to anyone examining all these needs is that there is no “one way” to represent a monetary value that is correct, best, or optimal.

Often the representation has to match an existing standard. For instance, perhaps the monetary value must be in the form of an ASCII string, like this: $4532.97. The standard might specify that it be in a fixed-length field, with spaces padding to the left as required. Or the standard might specify that the value be in XML, encoded in UTF-16, with a tag of “AMT” for the amount, and “CUR” for the three-character ISO currency code. Some older protocols have specified binary formats, with a numeric code for the currency and packed BCD for the value. I could go on and on with these, because many clever and/or crazy people have devoted a great amount of time to inventing all these schemes.

When storing or transmitting large numbers of values, often a compact (or compressed) encoding is essential. I once worked on a server that did algorithmic trading (that is, the program decided what stocks to buy and sell). This server needed to store (both on disk and in memory) huge tables of stock pricing data. On busy days this could amount to several hundred million entries. The original design of the server represented the monetary values in a fixed-length binary structure that occupied 22 bytes, so on busy days we were storing a couple of gigabytes of data – and moving that data between disk, memory, and CPU cache. We observed that this was a bottleneck in our code, so we came up with a variable-length encoding that averaged just 7.5 bytes per value. We rolled our own on this one, as the numeric representation we were using didn't have a compact encoding. The performance impact was stunning, much more than the 3x you might naively expect. The biggest impact came from fewer CPU cache misses, the second biggest from faster encoding/decoding time. The latter we didn't expect at all. The real lesson for me: compact encodings are very valuable!

Databases present another challenge for representing monetary values. The numeric column types available often can be specified with the precision required – but the API will require converting the value to or from a string or an unlimited precision value (such as BigDecimal). Sometimes the values don't need to be queried, but rather simply stored, as key-accessed blobs. In those cases, a compact encoding is valuable, especially for large tables of values accessed as a whole.

From all this we can derive another requirement for a monetary value representation: flexibility of encodings. There should be a variety of supported, standard encodings including (especially) a compact, variable-length binary encoding and a fixed-length binary encoding. There should be support for conversions to and from unlimited precision numbers as well as native types. There should be support for conversions to and from strings. Also very important, for flexibility: support for adding encodings – because there's no way to anticipate all the strange things the world will require of you...
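As an illustration of two quite different encodings of the same value, here's a small Java sketch. It is not the encoding we built for that trading server. The compact one is the well-known zigzag plus base-128 varint scheme (as used by Protocol Buffers), swapped in as a stand-in, and the class and method names are hypothetical. The ASCII one assumes a non-negative amount in cents.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical encoders, for illustration only.
final class MoneyEncodings {

    // Compact, variable-length binary: values near zero take fewer bytes.
    static byte[] encodeVarint(long scaled) {
        long z = (scaled << 1) ^ (scaled >> 63);  // zigzag: fold the sign into bit 0
        ByteArrayOutputStream out = new ByteArrayOutputStream(10);
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80));  // low 7 bits, continuation bit set
            z >>>= 7;
        }
        out.write((int) z);                        // final byte, continuation bit clear
        return out.toByteArray();
    }

    // Decodes exactly one value produced by encodeVarint.
    static long decodeVarint(byte[] bytes) {
        long z = 0;
        int shift = 0;
        for (byte b : bytes) {
            z |= (long) (b & 0x7F) << shift;
            shift += 7;
        }
        return (z >>> 1) ^ -(z & 1);               // undo the zigzag step
    }

    // Fixed-width ASCII field, padded with spaces on the left, e.g. "    $4532.97".
    static byte[] encodeFixedAscii(long cents, int width) {
        if (cents < 0) {
            throw new IllegalArgumentException("sketch handles non-negative amounts only");
        }
        String text = String.format(Locale.ROOT, "$%d.%02d", cents / 100, cents % 100);
        if (text.length() > width) {
            throw new IllegalArgumentException("value does not fit in field");
        }
        String padded = String.format(Locale.ROOT, "%" + width + "s", text);
        return padded.getBytes(StandardCharsets.US_ASCII);
    }
}
```

With this scheme $4532.97 stored as 453297 cents takes three bytes in the varint form and twelve in a twelve-character ASCII field. A real library would put encoders like these behind a common interface, so that new ones can be added as the world demands them.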