The Curiosity rover currently exploring Mars has an interesting image manipulation capability on board that is effectively a form of data compression, albeit a very unconventional one.

Since the early days of digital cameras, photographers have realized that there are ways to combine multiple digital images of the same scene to provide a better composite image. One of the first uses of this idea was to increase the camera's effective depth-of-field with a technique called focus stacking or focus bracketing. The idea is simple enough: you take several images of precisely the same scene, but each with different focus settings. Then you combine the parts of each image that are in focus, so that the entire resulting image is in focus.

Focus stacking is particularly useful in macro (close-up) photography, because the lenses used have very shallow depth of field. Digital photographers routinely use focus stacking today, generally with software such as Photoshop. You might start with images taken at 4 or 5 focus settings, then combine them carefully to get one image as a result. This is especially useful with studio or product photography, where your subject is stationary.

From the perspective of data size, this is a very inefficient process. To get a single final image, you have to move several full-size images from the camera to your focus stacking software. For an earthbound digital photographer, that's a few seconds of time as the memory card gets read. For Curiosity, millions of miles away on Mars, it's an entirely different story: it has to send all those images over a very slow communications link.

Now Curiosity has a macro camera (the MAHLI imager), and it has a major depth-of-field challenge. It's designed to capture images of rocks for geologists to study, and those rocks generally have surfaces that are far from flat. But sending multiple images for focus stacking over that slow data link takes more bandwidth than they'd like to use. The solution: implement focus stacking right on the Curiosity rover, so that only the final, all-in-focus image has to be transmitted. The MAHLI team calls this technique “focus-merge”.

How do they accomplish this feat, normally done with human manipulation?

First, the MAHLI team built an in-focus sensing capability into the camera that extends across the entire image. On every image, the camera knows which pixels are in focus and which are not. This is similar to (but more extensive than) the multiple-areas autofocus capability of most high-end digital SLRs today. This in-focus data is stored with each image taken.

Then for any given rock that MAHLI is imaging, they'll take several photos (2 to 8, depending on the rock's depth variation) at different focus settings. Software on board Curiosity then scans all the images pixel-by-pixel, choosing the image with the best focus for each pixel. It also creates a visualization map showing the image selected at each pixel (using 2 to 8 gray levels, with blacker being more distant).
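To make the per-pixel selection concrete, here's a minimal Python sketch of the general idea. It is not MAHLI's flight software: the function names are my own, the sharpness measure (local contrast against the four neighboring pixels) is just one simple stand-in for whatever in-focus data the camera actually records, and I'm assuming the stack is ordered from nearest focus to farthest.

```python
def sharpness(img, r, c):
    """Local contrast at (r, c): sum of absolute differences between the
    pixel and its 4 neighbours (coordinates are clamped at the edges).
    Assumption: higher local contrast means better focus."""
    h, w = len(img), len(img[0])
    centre = img[r][c]
    total = 0
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr = min(max(r + dr, 0), h - 1)
        cc = min(max(c + dc, 0), w - 1)
        total += abs(centre - img[rr][cc])
    return total


def focus_merge(stack):
    """Merge same-sized grayscale images (lists of rows).
    Assumption: stack[0] is focused nearest, stack[-1] farthest.
    Returns (merged_image, index_map), where index_map records which
    source image supplied each pixel."""
    h, w = len(stack[0]), len(stack[0][0])
    merged = [[0] * w for _ in range(h)]
    index_map = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Pick the image whose pixel looks sharpest here
            # (ties go to the nearer-focused image).
            best = max(range(len(stack)),
                       key=lambda i: sharpness(stack[i], r, c))
            merged[r][c] = stack[best][r][c]
            index_map[r][c] = best
    return merged, index_map


def focus_map_gray(index_map, n_images):
    """Turn the index map into gray levels (0-255), one level per source
    image, with farther focus rendered darker."""
    if n_images < 2:
        return [[255 for _ in row] for row in index_map]
    step = 255 / (n_images - 1)
    return [[round(step * (n_images - 1 - i)) for i in row]
            for row in index_map]
```

Calling `focus_merge` on a stack of 2 to 8 images gives back both the merged image and the map of which image won at each pixel; `focus_map_gray` then turns that map into the kind of gray-level picture described above. A real implementation would almost certainly smooth the selection over a neighborhood rather than deciding each pixel in isolation.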

To anyone who has done much macro photography (as I have, with wildflowers), the result approximates magic: extreme close-up photos with essentially infinite depth-of-field. I really can't use this technique with wildflowers, because the darned things are highly unlikely to be still at microscopic scales. But I can envy the result nonetheless.

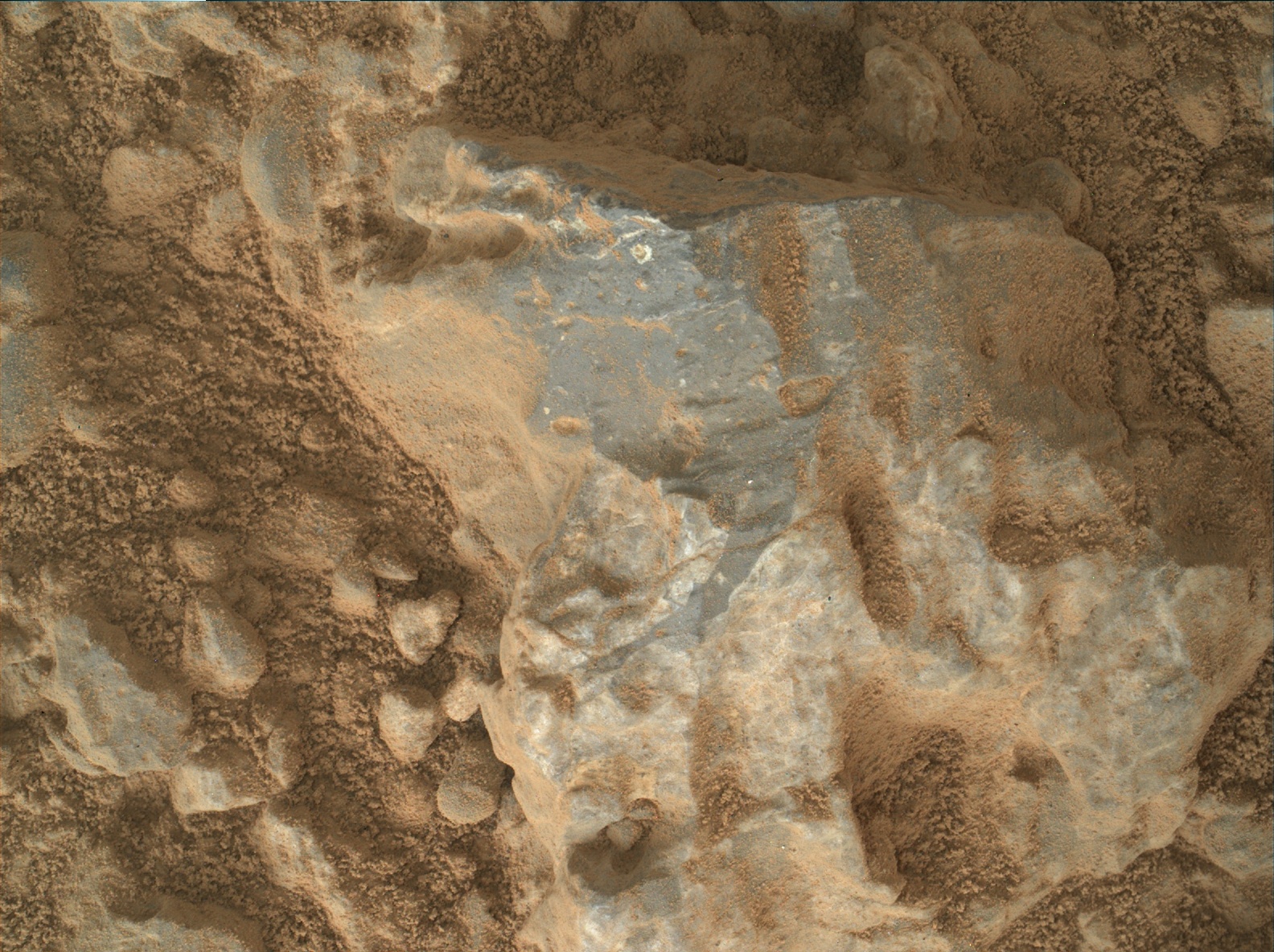

Here's a recent Curiosity focus-merge image of a rock's surface (on top) and it's matching focus map (at bottom):
